Today someone asked on Google+:

> Hello, when computing the gradients CNN, the weights need to be rotated, Why ?

I had the same question when I was poring over code back in the day, so I wanted to clear it up for people once and for all.

Simple answer:

Rotating the weights is just an efficient and clean way of computing the gradient of a valid 2D convolution w.r.t. the inputs.

There is no magic here.

Here’s a detailed explanation with visualization!

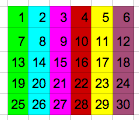

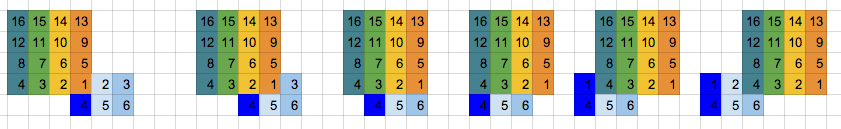

Figure labels: Input, Kernel, Output

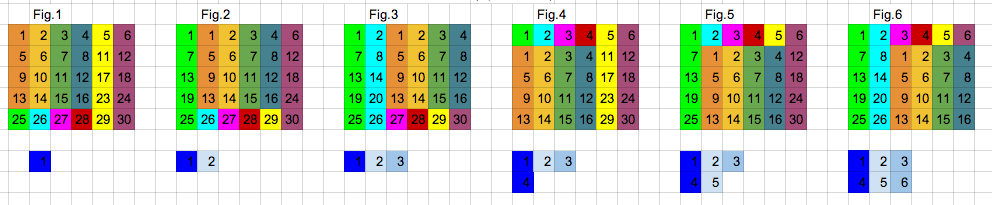

Section 1: valid convolution (input, kernel)

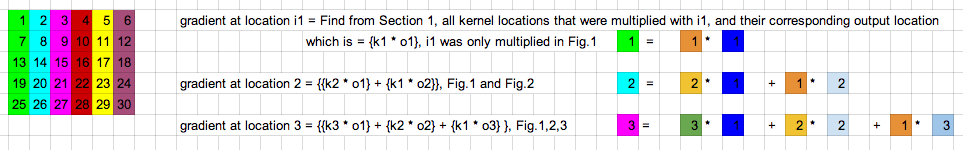

Section 2: gradient w.r.t. input of valid convolution (input, kernel) = for each input location, the sum of the output gradients it contributed to, each weighted by the kernel entry that connected them

Section 3: full convolution (180-degree rotated filter, gradient of output)

As you can see, the calculation for the first three elements in section 2 is the same as the first three figures in section 3.
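If you'd rather check this numerically than by eye, here is a minimal NumPy sketch. It assumes "convolution" means the sliding-window operation most CNN libraries use (no flip in the forward pass), and the function names `correlate2d_valid`, `grad_input_direct`, and `grad_input_rotated` are made up for this illustration, not taken from any library. The first gradient function follows section 2 literally, the second follows section 3.

```python
import numpy as np

def correlate2d_valid(x, k):
    """CNN-style 'valid convolution': slide k over x without flipping it."""
    kh, kw = k.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * k)
    return out

def grad_input_direct(grad_out, k, input_shape):
    """Section 2: scatter each output gradient back onto the inputs it used."""
    kh, kw = k.shape
    grad_in = np.zeros(input_shape)
    for i in range(grad_out.shape[0]):
        for j in range(grad_out.shape[1]):
            # Output (i, j) was computed from this input window, weighted by k.
            grad_in[i:i + kh, j:j + kw] += grad_out[i, j] * k
    return grad_in

def grad_input_rotated(grad_out, k):
    """Section 3: 'full' pass of the 180-degree rotated kernel over grad_out."""
    kh, kw = k.shape
    # Zero-padding by (kernel size - 1) on every side turns 'valid' into 'full'.
    padded = np.pad(grad_out, ((kh - 1, kh - 1), (kw - 1, kw - 1)), mode="constant")
    return correlate2d_valid(padded, np.rot90(k, 2))

rng = np.random.default_rng(0)
x = rng.standard_normal((5, 5))         # input
k = rng.standard_normal((3, 3))         # kernel
out = correlate2d_valid(x, k)           # forward pass, shape (3, 3)
grad_out = rng.standard_normal(out.shape)  # stand-in for the gradient w.r.t. output

same = np.allclose(grad_input_direct(grad_out, k, x.shape),
                   grad_input_rotated(grad_out, k))
print("both methods agree:", same)
```

The loops are deliberately naive so that each line maps onto the pictures. A real implementation would use an optimized convolution routine, which is exactly why writing the gradient as one more convolution is convenient.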

Hope this helps!